ARCHIE Part One

An Orthogonal Approach to Artificial Intelligence

February, 2025

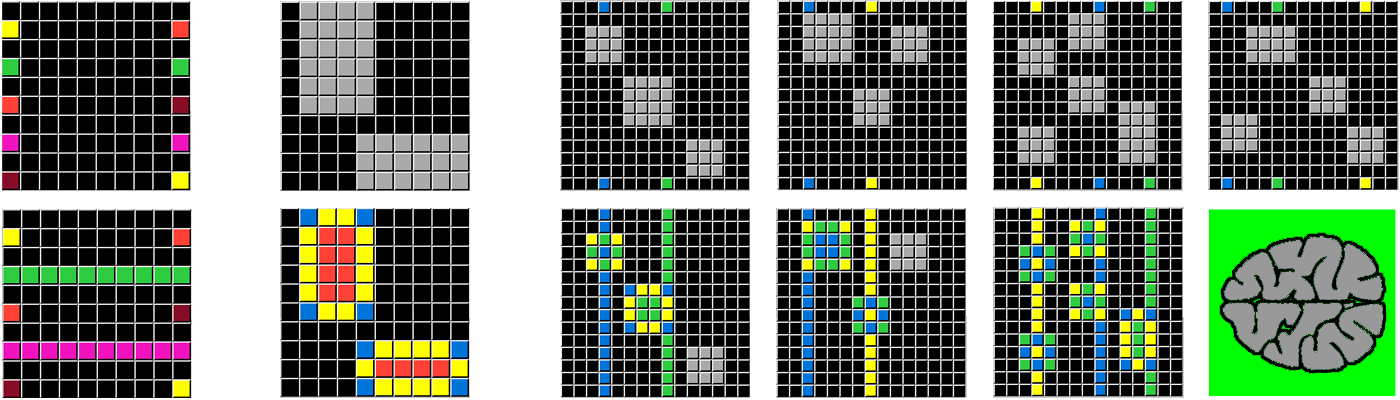

The quest for AGI, Artificial General Intelligence, is this epoch's search for the Philosopher's Stone. More resources are currently devoted to pursuing AGI than were ever devoted to those earlier quests, spurred on by the amazing results we see from Neural Network LLMs like GPT. ARCHIE is an application that competes against LLMs as a method for computerizing Abstract Reasoning.

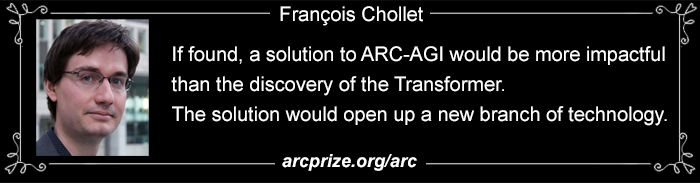

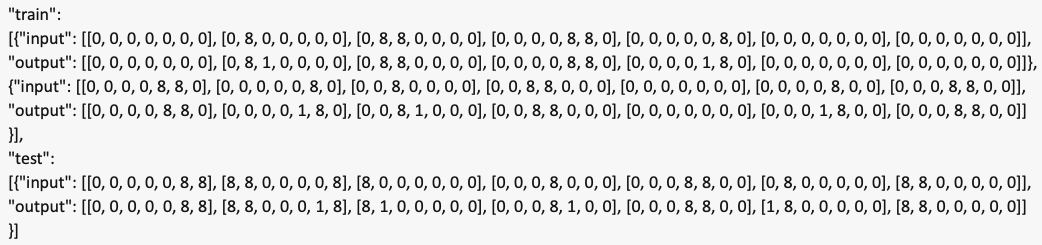

One of the most important tools for building Neural Networks is the Python-based Keras, created by François Chollet. Chollet has written several books on Deep Learning, was a Senior Staff Engineer at Google, and is the creator of ARC-AGI. ARC stands for "Abstract Reasoning Corpus" and is like an IQ test for human and non-human intelligence systems.

The ARC "Corpus" is a collection of visual puzzles designed by humans so that other humans can solve them almost intuitively. They don't require prior cultural knowledge or even language. Supposedly, most of the puzzles can be solved by children. The puzzles are a series of input and output grids, as small as 3 x 3 or as large as 30 x 30. Usually 2 or 3 pairs of input/output "Example" grids are given, and a single input "Test" grid challenges the test taker to produce the appropriate Test output grid. Each position in the grid is populated by a color value from 0 to 9. That's it! There is a large prize ($600K sponsored by Mike Knoop and François Chollet) for a computer system that can solve 85% of the puzzles in a Private Test set. The Private Test set is hidden so Neural Networks can't be pre-trained to memorize the solutions.

Simple ARC Example:

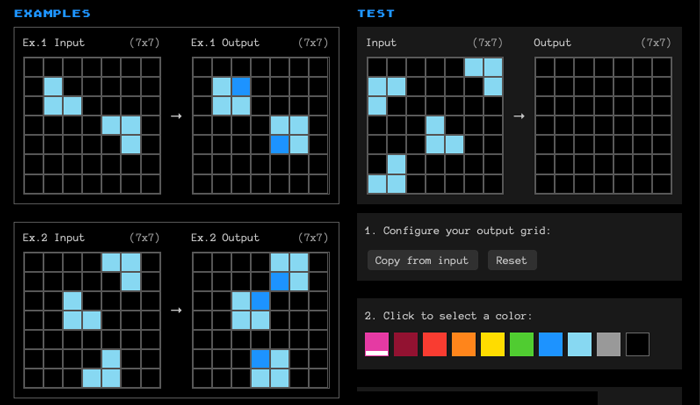

Below is a screenshot of an example test taken from the ARC Prize site itself. There are 200 "Training" puzzles and another 200 harder "Evaluation" puzzles. The terminology is skewed toward how a Neural Network is trained. Regardless, many non-AI enthusiasts work the puzzles just for fun.

In the example, even a child will see what's happening: A darker Blue "pixel" completes the missing corner from the Teal square. There is a "Submit solution" button for feedback on your placements. The Test output grid must be an EXACT MATCH in grid size, color choice, and pixel placement for the solution.

Behind the Scenes

The ARC Prize site page is powered by interactive JavaScript. When you are finished with a puzzle you click "Next" and a new puzzle loads on the page. Behind the scenes, a JSON file contains the data needed to populate the page. It also contains (but does not display) the solution. The JSON for the entire puzzle above is this:

If you are writing a program to solve ARC puzzles, you are not interacting with the web page but rather downloading all the JSON files and coding against those. (For the curious, the 0s in the data above represent the Black pixels, 8s render as Teal pixels and 1s are the Blue pixels.)
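A minimal sketch of what coding against those files can look like, assuming the layout used by the public ARC JSON files: top-level "train" and "test" lists, where each entry holds an "input" grid and an "output" grid of integers from 0 to 9. The miniature task below is invented for illustration and is not the puzzle shown above, and `is_exact_match` is a hypothetical helper name.

```python
import json

# A made-up miniature task in the ARC file layout (not the puzzle shown above).
TASK_JSON = """
{
  "train": [
    {"input": [[8, 8], [8, 0]], "output": [[8, 8], [8, 1]]},
    {"input": [[0, 8], [8, 8]], "output": [[1, 8], [8, 8]]}
  ],
  "test": [
    {"input": [[8, 0], [8, 8]], "output": [[8, 1], [8, 8]]}
  ]
}
"""


def is_exact_match(candidate, solution):
    """True only if the grid size and every cell value agree."""
    return candidate == solution


task = json.loads(TASK_JSON)
test_pair = task["test"][0]

# Hypothetical answer: fill the one Black (0) cell with Blue (1).
answer = [[1 if cell == 0 else cell for cell in row] for row in test_pair["input"]]

print(len(task["train"]), "example pairs")
print("exact match:", is_exact_match(answer, test_pair["output"]))
```

Comparing nested lists directly covers all three requirements at once: a grid of the wrong size, a wrong color, or a misplaced pixel each makes the comparison fail.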

ARC at UCLA

In the latter part of 2024 Mike Knoop, Bryan Landers, and François Chollet did a tour, presenting the ARC Challenge to Computer Science departments at major US Universities. I was able to wrangle an invite to my alma mater, UCLA, and had a chance to speak with Bryan and Mike directly after their lecture (François was unable to attend). There were a couple hundred people present but only a small handful of us had personally worked on the challenge. We discussed philosophy and Mike asked about my approach.

I revealed that I was not using the tools outlined by the contest. I had built a desktop application that utilizes conceptual hierarchies, not a Neural Network, and I was using Curriculum-based training in interacting with my application. I did realize my method would prevent me from entering the monetary competition. But I felt that first solving the challenge was more important. Mike commented, "Good luck. You are certainly taking an Orthogonal approach to what everyone else is doing."

An Orthogonal Approach to LLMs, LMMs, and Neural Networks (NN) in General

After the presentation, a friend asked if I had a chance to converse with the sponsors. When I told him of the "Orthogonal" comment he suggested it might have been a veiled criticism. Much of the 2024 presentation was celebrating progress made by brute force approaches. The top of the leaderboard was achieved by a Neural Network generating 2,000 Python programs for every puzzle and selecting the best candidate. Its partial success seemed limited by the expenditure and time limits. The more recent result from OpenAI's best-performing o3 model involved thousands of dollars of computing for each puzzle. Professor Melanie Mitchell posted about OpenAI's latest attempt, "I think solving these tasks by brute-force compute defeats the original purpose [of the ARC Challenge]."
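To make the "generate many programs and select the best candidate" idea concrete, here is a toy sketch. It is not the leaderboard system: there a Neural Network wrote the candidate programs, whereas this example assumes a tiny hand-written pool of four grid transforms. The example pairs and the function names are all invented for illustration.

```python
# Hypothetical pool of candidate programs; a real system would generate thousands.
def identity(grid):
    return [row[:] for row in grid]


def flip_horizontal(grid):
    return [row[::-1] for row in grid]


def flip_vertical(grid):
    return [row[:] for row in grid[::-1]]


def transpose(grid):
    return [list(col) for col in zip(*grid)]


CANDIDATES = [identity, flip_horizontal, flip_vertical, transpose]


def score(program, examples):
    """Count the example pairs the program reproduces exactly."""
    return sum(program(inp) == out for inp, out in examples)


def select_best(candidates, examples):
    """Return the candidate with the highest score (the first one wins ties)."""
    return max(candidates, key=lambda program: score(program, examples))


# Invented example pairs: each output is the input mirrored left to right.
examples = [
    ([[1, 0, 0], [2, 0, 0]], [[0, 0, 1], [0, 0, 2]]),
    ([[0, 3], [4, 0]], [[3, 0], [0, 4]]),
]

best = select_best(CANDIDATES, examples)
print(best.__name__, score(best, examples))
```

The cost of this style grows with the size of the candidate pool, which is where the expenditure and time limits mentioned above start to bite.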

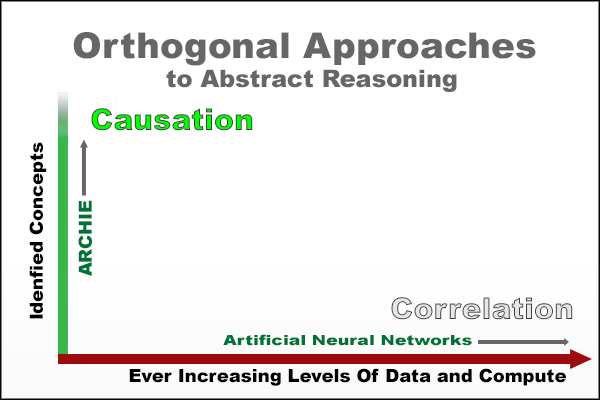

The term "Orthogonal" literally means at right angles. It is frequently used to indicate two things that vary across completely different dimensions. It's an excellent way to illustrate the difference between the big-data/large-compute approach and a conceptually based one.

In his 2019 paper On the Measure of Intelligence, François Chollet writes:

"“Solving” any given task with beyond-human level performance by leveraging either unlimited priors or unlimited data does not bring us any closer to broad AI or general AI..."

The "priors" Chollet references are what he calls the "human cognitive priors." The ARC representations are intended to rely on these innate priors as the key to solving the puzzle. Utilizing "Cognitive Priors" to solve the puzzles is the main draw for me. Looking at a puzzle and somehow instantly recognizing the answer, yet having no notion of how to program the same solution, reveals a tremendous amount about the functioning of the human mind. It also requires a serious "metacognition" approach, a deep and specific analysis of your own thought process.

Because ARCHIE is not using an Artificial Neural Network to "learn" a generalized way to solve ARC puzzles, I suspect many will consider this effort to be another exercise in brittle one-off programming. A favorite example of AI researchers is the program designed to play the video game "Breakout." It easily outperformed even the best human players. However, when the position of the paddle was shifted upward only a few pixels on the screen it performed disastrously. This effect is not limited to programs specifically designed for a task. A famous example of Convolutional Neural Network (CNN) mis-performance involved adding a single pixel on various Training images, and the CNN ended up using those to identify each image and not the object data that any sentient creature would use. A massive drawback of Artificial Neural Networks (ANNs) is their "Black Box" methodology for solving problems. I was able to reveal the mathematical function developed by an ANN I built from scratch, and knowing how it worked compelled me to NOT use a Neural Network for this particular problem. You can read about "Black Boxes: Inside the Mind of a Neural Network" on this site.

Three Types of Abstraction Puzzles

I can think of at least 3 major categories of abstract prediction puzzles. The first I wrote about in another article on the site titled: The Numbers. In The Numbers, I introduce the "complete the ongoing series" type puzzle. An example might be "1,3,5,7,9,?" where the last element is 11 because these are increasing odd numbers. A non-numeric puzzle might be "M,T,W,T,F,S,?" where the missing element is "S" for Sunday. Usually, the mechanism for solving these is the recollection of a memorized sequence. Memorization can indicate a cultural component; for example, non-English speakers don't represent days of the week the same way. Non-human programmatic solutions probably involve pattern matching from stored data.

The second type of puzzle presents an analogy. As an example: abc —> abd; jkl —> jk?

The nice thing about this type of problem is that you can verbalize it, and the listener will quickly understand the problem posed: "If you have the letter sequence 'abc' and it changes to 'abd', how would you change 'jkl' in the same way?"

For her Ph.D. dissertation, Melanie Mitchell worked under Douglas Hofstadter to create the "Copycat" program, which specifically explored these types of problems. She has posted a book excerpt explaining Copycat at length here: "The Copycat project: A model of mental fluidity and analogy-making."

The explanation of Copycat's architecture involves a "parallel terraced scan" and concepts like "heat", "pressure", "underlying randomness", and "halo"; Copycat's output is a probability histogram of preferred solutions. The reason for randomness and an answer probability is that no single definitive answer exists. You may expect the answer to abc —> abd; jkl —> jk? to be the letter M, since M follows L in the alphabet in the same way D follows C. But another solution might be jkd, since the "underlying rule" could be to place D in the final spot. And consider abc —> abd; xyz —> xy?. Here the solution of placing D in the final spot becomes more appealing, since the "next letter of the alphabet" theory falls apart: there is no "next letter." I might consider the final spot to be A, where the sequence wraps back on itself. Or perhaps the final spot becomes null, as the sequence advances off the edge of a series. Or maybe it's the left brace {, since that's next in the numeric ASCII sequence after lowercase z. (This all reminds me a bit of the TV game show "Family Feud" where the challenge is to guess the most likely human responses to a particular question. My ASCII answer would be top 10 with programmers but a big red X with everyone else.)
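The competing readings of the rule can be written down side by side. The sketch below is not Copycat and has none of its randomness or probabilities; it simply assumes four hand-picked rules (all names are hypothetical) that each agree with the abc to abd example, then applies them to the last letter of a new string.

```python
import string

ALPHABET = string.ascii_lowercase


def next_letter(ch):
    """Alphabet successor; None when there is no next letter (after z)."""
    i = ALPHABET.index(ch)
    return ALPHABET[i + 1] if i + 1 < len(ALPHABET) else None


def next_letter_wrapped(ch):
    """Alphabet successor that wraps from z back to a."""
    return ALPHABET[(ALPHABET.index(ch) + 1) % len(ALPHABET)]


def next_ascii(ch):
    """Next character by numeric code."""
    return chr(ord(ch) + 1)


def always_d(ch):
    """Literal reading of the example: put d in the final spot."""
    return "d"


RULES = {
    "next letter": next_letter,
    "next letter, wrapping": next_letter_wrapped,
    "next ASCII character": next_ascii,
    "replace with d": always_d,
}


def candidate_answers(target):
    """Apply each rule to the last character of target."""
    answers = {}
    for name, rule in RULES.items():
        last = rule(target[-1])
        # A missing successor is read here as dropping the final character.
        answers[name] = target[:-1] + (last if last is not None else "")
    return answers


for word in ("jkl", "xyz"):
    print(word, candidate_answers(word))
```

For jkl, three of the four rules agree on jkm and only the literal rule gives jkd. For xyz, every rule gives a different answer, which is exactly why the puzzle has no single definitive solution.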

Before we move onward to the third puzzle type, I would like to underline that Analogy is a supremely powerful intellectual tool. Analogies rarely convey a precise piece of information. The purpose of analogy is to convey a MASSIVE amount of structured information simultaneously, across several modalities of understanding: visual, verbal, and kinesthetic. In teaching Mountain Biking I explain that over rough terrain, riding a mountain bike is not like riding on the road, but is much more like skateboarding, surfing, or snowboarding. If you ride with your weight and control through your feet (and not your seat and handlebars like on the road) you will navigate treacherous surfaces that would otherwise cause you to crash and be injured. This single analogy starts beginners off in an advanced direction, even if they have only witnessed board sports. For experienced boardriders, the analogy is absolutely transformative.

The third type of puzzle is created to test Abstract Reasoning where there is a single, definitive, true answer. The ARC corpus is a collection of these types of visual puzzles. Some of these are "intuitively obvious." However, the best of them will suggest an initial theory about the "underlying rule" which requires updating and refinement as the other examples in the set are analyzed. In the end, there needs to be consensus on a single rule which fits all the presented examples AND that rule can then be applied to render an unequivocal solution. Bonus points if the puzzle suggests a Human Cognitive Prior which feels both intuitive and yet unexpected.

Abstract Reasoning's Relationship with AGI

Very recently I learned OpenAI's definition of AGI. There has long been a struggle to define even INTELLIGENCE itself, and adding the requirements that it is both GENERAL and ARTIFICIAL increases the confusion. Fortunately OpenAI cleared this up: "AGI" will have been achieved at OpenAI when one of its AI models generates at least $100 billion in profits. (reference)

I did not consider that ARCHIE could possibly help OpenAI achieve $100 Billion in profits. But the key term is 'Profit.' If their latest model required a million dollars in compute and still fell short of solving all 400 puzzles, then the "Orthogonal Approach" of ARCHIE's architecture, which solves puzzles "instantly and for free," might just be an invaluable alternative.

ON THE OTHER HAND... I propose the true adherents of Cognitive Science are looking for clues to "Universal Intelligence." How is it possible that single-celled organisms find food, avoid toxic environments, evade predation, and reproduce; all without brains? What can we learn about the biochemical decision-making that predates the first neuron? Are other dynamic physical processes instances on an intelligence continuum?

One of the earliest cited tests of artificial intelligence was Alan Turing's "Imitation Game" (reference), often called "The Turing Test."

The release of ChatGPT in 2022 certainly put to rest any question of whether a machine can convincingly imitate a human over text. It's amazing! Technology to extend LLMs for convincing voice conversations is being deployed this year. We will be challenged by what may become "deepfakes" in real-time. So is this AGI?

ELIZA, Pareidolia, and the Theory of Mind

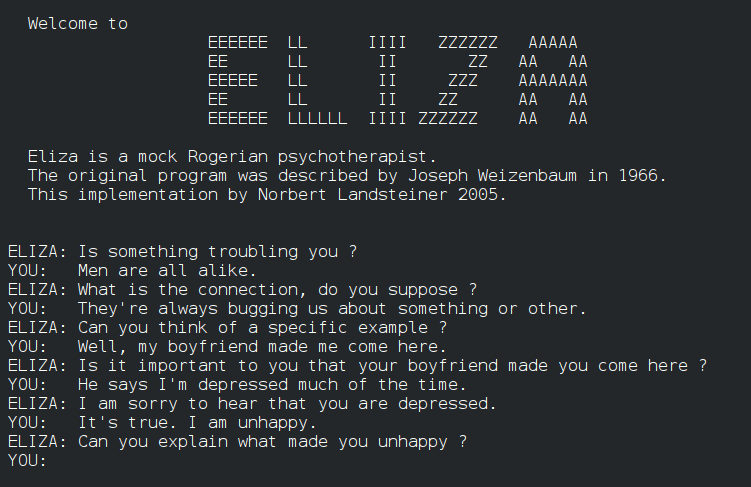

I have had a preparatory advantage when viewing the success of LLM (and LMM) responses. In 1984 the first Macintosh computers were sold and I was a very early adopter. The Mac's graphic interface represented a sea change from the Commodore 64 and all the other text-only computers. Macintosh attracted strong interest from the academic community. Soon many important comp-sci research-type programs were released on "shareware" diskettes through local computer clubs. (There would not be a public internet until many years later.) Programs I remember being fascinated by included "Conway's Game of Life," "Sharks and Fishes," and especially "ELIZA."

I found ELIZA's responses to my text input fascinating. Of course, I never was fooled into thinking it was a person, but I would try to "play along" and see how long we could keep a sensible conversation going. I would edit impressive sections and print them to show others about "modern progress" in artificial intelligence. What I didn't know then was that ELIZA was created by Joseph Weizenbaum back in 1966.

In my article Pareidolia I discuss the effect of seeing faces in otherwise random patterns, like textured wall coverings, clouds or tree bark. It turns out our brains are wired to see faces, even before the normal visual processing queues have fired. We have evolved to spot faces in chaotic environments, even where they cannot possibly exist. We have likewise evolved a "Theory of Mind," predicting the sentience and intentions of others. Psychological experiments demonstrate that when shown simple dots moving around a screen, people will begin to assign intentions like, "That big one is chasing the little one!"

We detect human-type sentience in interactions with computer programs, even as primitive as ELIZA, because we are biologically programmed to seek out other intelligence. And not just any other intelligence, but human-type intelligence, minds we believe are similar to our own. The popular quest for AGI is not a search for universal general intelligence (which is all around us) but rather a desire for SHI: Simulated Human Intelligence. We will ignore non-conforming evidence to find minds like our own, just as we see faces in the clouds.

I've drifted away from the ARC Prize Challenge at this point, but I am trying to buttress an argument against the conclusion that ARCHIE is "just a program" because ARCHIE was not "trained" to correlate the same massive data required by LLMs. ARCHIE was trained with a curriculum to learn causality in the way you would teach your own child.

Unsupervised, Supervised, or Curriculum Learning?

For my Black Boxes article, I created a Feed Forward Artificial Neural Network that classified two-dimensional data into a prediction of Male or Female. The program modified its initial Weight and Bias values by comparing its prediction to the human-assigned classification of each data point. This process of "iterative optimization," aligning predictions with human-assigned values, is an example of "Supervised Learning." Getting humans to assign a classification for every value becomes prohibitively expensive on huge data sets. The alternative, letting the computer assign its own correlations based on data values alone, is "Unsupervised Learning." That might seem impossible until you remember that's how Statistics works. No human intuits a correct "Average" and then has the computer re-assemble existing data around that value. The attributes in statistics come from the data itself.
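The supervised "iterative optimization" loop can be sketched in a few lines. This is not the network from the Black Boxes article: it assumes a single sigmoid neuron, six invented and already-scaled data points, and arbitrary choices for the learning rate and number of passes. It only illustrates the idea of nudging the Weight and Bias values toward the human-assigned labels.

```python
import math

# Invented, already-scaled 2-D points with human-assigned labels (0 or 1).
DATA = [
    ((0.2, 0.3), 0), ((0.3, 0.1), 0), ((0.1, 0.4), 0),
    ((0.8, 0.7), 1), ((0.9, 0.6), 1), ((0.7, 0.9), 1),
]


def predict(weights, bias, point):
    """Sigmoid of the weighted sum: a value between 0 and 1."""
    z = weights[0] * point[0] + weights[1] * point[1] + bias
    return 1.0 / (1.0 + math.exp(-z))


def train(data, epochs=2000, learning_rate=0.5):
    """Start from zero weights and repeatedly correct them against the labels."""
    weights, bias = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for point, label in data:
            # Compare the prediction to the human-assigned label.
            error = predict(weights, bias, point) - label
            # Nudge each parameter against the error (gradient of the log loss).
            weights[0] -= learning_rate * error * point[0]
            weights[1] -= learning_rate * error * point[1]
            bias -= learning_rate * error
    return weights, bias


weights, bias = train(DATA)
for point, label in DATA:
    print(point, label, round(predict(weights, bias, point), 3))
```

Every update depends on a label a human supplied, which is what makes this supervised. Remove the labels and there is no error signal to follow, so any structure has to come from the data values themselves.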

If we continue the scenario of "teaching your child", unsupervised learning (e.g. about animals in nature) would involve just "sending them out to play" where they would learn about the animals they encounter through personal experience alone. If you accompany the child and point out examples, names and perhaps which animals are friendly or dangerous, that would be supervised learning. A curriculum approach would also involve teaching the biology and taxonomy of animal life. "This is a kitty, kitties are mammals, mammals give birth to live young (not lay eggs like birds), birds and mammals are both vertebrates...". A curriculum is what distinguishes education from mere training. You train a rat to push a lever and receive a treat. You educate a human about agriculture and how to grow food.

Intentional Personification

The name of my original program was "MiniARC" to reflect the humble attempt to learn from the ARC Challenge. As its capabilities expanded I knew outsiders would need personification to accept what it became capable of. Calculators are not considered intelligent despite outperforming humans in a quintessential human task. I also found that demonstrations where ARCHIE immediately solved the puzzle left audiences unimpressed. When I inserted pauses and interim screen updates, human observers could better see the process through which ARCHIE was proceeding. "It's thinking!"

Abstract Reasoning Curriculum's Heuristic Inference Engine (ARCHIE)

ARCHIE is an acronym that is an intentional personification. However, we can break it down and discover that the name is fitting. If we start with "Abstract Reasoning Curriculum's" and realize that the subject is "Curriculum" and "Abstract Reasoning" is the type of Curriculum, then we see the object is the "Engine." So ARCHIE is the Curriculum's Engine. "Heuristic" refers to problem-solving with rules that are only loosely defined, and "Inference" refers to conclusions based on evidence and reasoning. In PART TWO, when we analyze the process by which ARCHIE solves puzzles, the acronym will seem appropriate.

Fluid Generalization or Engineered Solutions

The ARC Prize site repeats concerns about engineers programming solutions in an effort to "game the system." Chollet calls out "unlimited priors and unlimited data" as disqualifying techniques in solving ARC puzzles. This should be of little concern, since ARCHIE utilizes a minimum number of priors and does not accumulate data.

I posit that the "Human Cognitive Priors" I am trying to codify in ARCHIE are equivalent to the axioms of a mathematical system. Axioms are the required starting point and cannot be derived, and in the same way, priors must be coded and cannot be learned by an intelligent system.

What Are We Dealing With at This Point?

Architectural Considerations:

- ARCHIE is a desktop application designed to solve ARC puzzles.

- It produces solutions in a "fast and free" manner.

- The application is self-contained and does not interact over a network.

- It relies on curriculum-based training to acquire additional "human cognitive priors."

- ARCHIE is not memorizing the solutions to any puzzle.

- Each puzzle appears fresh as a novel challenge each time it is attempted.

- The Inference Engine proceeds in a hierarchical fashion.

- ARCHIE is deterministic and oriented toward discovering the single right answer in its entirety.

What's Next?

PART TWO will describe in detail how ARCHIE tackles solving a simple ARC puzzle. This architecture will reveal the basis for its success.