The Boundaries of Objectivity

Exploring a Problem Space with Darren Lott

Kingdom of Mind

Path to AGI ends in a Cornfield October, 2025

By 1957-58, the seeds for the greatest division in AI had already been sown: Symbolic AI, as exemplified by Newell and Simon's General Problem Solver (1957), and Probabilistic AI, as exemplified by Rosenblatt's Perceptron (1958). By the 1980s, the Symbolic AI movement had stalled, and after a very late start Probabilistic AI has also stalled. Over half a century later, neither approach has even provided definitions for its highest goals: Intelligence and Consciousness. By the end of this article, I will attempt a definition of each.

ARCHIE

An Orthogonal Approach to Artificial Intelligence February, 2025

The quest for AGI (Artificial General Intelligence) is this epoch's search for the Philosopher's Stone. More resources are currently devoted to AGI than to any of the previous quests, spurred on by the amazing results we see from Neural Network LLMs like GPT. ARCHIE is an application that competes against LLMs as a method for automating Abstract Reasoning.

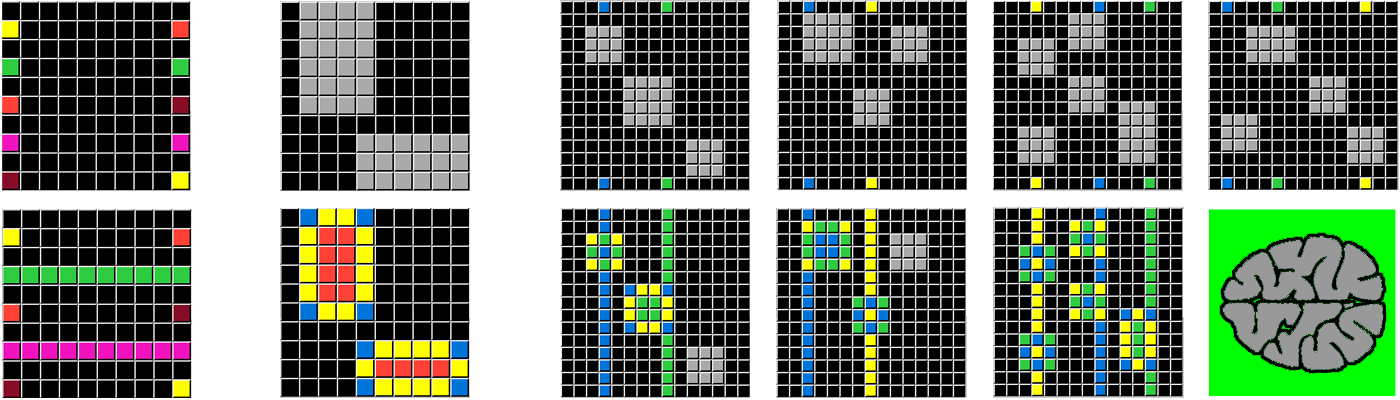

Illusion of Thinking

One Puzzle to Rule Them All December, 2025

Large Reasoning Models (LRMs) claim to use a revolutionary architecture that "reasons" through scenarios, similar to how human problem solvers operate. Apple released a paper ("The Illusion of Thinking") that disputes this claim. The paper concludes by "ultimately raising crucial questions about their true reasoning capabilities." I pushed ARCHIE to see how well it can do on a similar benchmark problem.

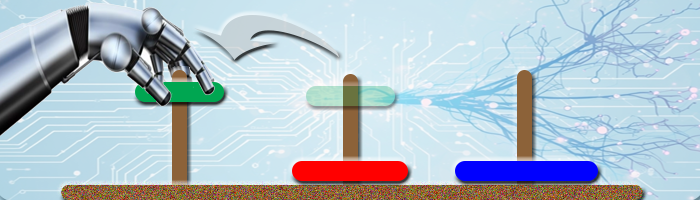

Blocks World

The Foundation of Artificial Intelligence January, 2026

One of the earliest Artificial Intelligence challenges was to manipulate toy blocks using a robot arm. The AI lab at MIT pioneered this work starting in the late 1950s. Its simplicity earns Blocks World the label "toy problem," yet even today it remains another "NP-hard" search and planning problem. After defeating the Tower of Hanoi, can ARCHIE crush this puzzle too?

Speed of Life

Anchoring Orders of Magnitude March, 2024

There are modalities of thinking that don't involve language, but our vocabulary consists of concepts we arrange and rearrange while trying to navigate the world. We know language is the basis for communicating with others, but the dual role of words is often overlooked. When exploring a "thought-space," if we encounter something truly new, we need to create a word for it, to act as a map to find our way back. And to tell others. The language of large numbers is no exception.

Black Boxes Were Made to be Opened...

By Creating a CT Scan of a Neural Network February, 2024

Like Artificial Sweeteners, Artificial Intelligence comes in many forms and flavors. And AI will be as incorporated into our daily lives as Splenda. But can the current incarnation of Machine Learning result in a conscious super-intelligence? One that may present an existential threat to humanity? To dig deep into what's possible I built a simple Neural Network and put it through its paces. The results were surprising.

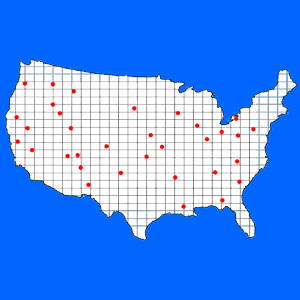

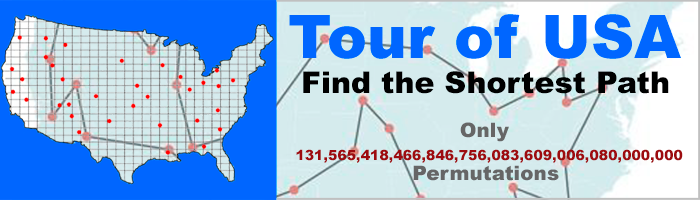

Traveling Salesman Problem

One Million Dollars if you can Solve it! April, 2024

In 1962 Procter & Gamble created an advertising campaign, offering a $10,000 prize to whoever submitted the shortest route linking 33 defined US cities. That prize was enough to buy a house at the time. And I missed it.

Finding the shortest route between a set of locations is a class of mathematical problem known as "The Traveling Salesman Problem" or TSP. It is an optimization problem, easily solved when the number of locations is very small, and seemingly impossible with larger sets. In fact, anyone who engineers the "definitive algorithm" for this problem will have solved the P vs NP challenge posed by the Clay Mathematics Institute, and be awarded $1,000,000. Show me the money!
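To see why small sets are easy and large sets are not, here is a minimal brute-force sketch in Python. It is not the method from the article, just the naive approach of trying every ordering. The function names `shortest_tour` and `orderings_to_check` and the four sample coordinates are hypothetical, chosen only for illustration.

```python
from itertools import permutations
from math import dist, factorial


def shortest_tour(points):
    """Brute force: try every ordering that starts and ends at points[0]."""
    start, rest = points[0], points[1:]
    best_length, best_tour = float("inf"), None
    for order in permutations(rest):
        tour = (start, *order, start)
        # Sum the straight-line distance of each leg of the round trip.
        length = sum(dist(a, b) for a, b in zip(tour, tour[1:]))
        if length < best_length:
            best_length, best_tour = length, tour
    return best_length, best_tour


def orderings_to_check(n):
    """Number of orderings this brute force examines for n locations."""
    return factorial(n - 1)


# Hypothetical sample: four locations at the corners of a 4 x 3 rectangle.
cities = [(0, 0), (0, 3), (4, 3), (4, 0)]
length, tour = shortest_tour(cities)
print(length, tour)
print(orderings_to_check(len(cities)), "orderings for 4 locations")
print(orderings_to_check(33), "orderings for 33 locations")
```

With four locations the loop checks only six orderings. With the 33 cities of the contest, the same loop would have to check 32! orderings, which is why nobody solves large instances this way.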

Darren Lott

I graduated from UCLA with a custom major: "The Interrelation of Conceptual Structures." Two years later UCSD established the first "Cognitive Science" department, mirroring my curriculum. That's the Quest: Stay ahead of the game, don't accept common approaches to unsolved problems. Along the way I've made fundamental discoveries. And I would like to share them with you. Are you ready?

Algorithms

Alchemy

Tags

Cognitive Science AI Complexity Machine Learning Neural Network Mathematics Algorithms Alchemy Abstract Reasoning Intelligence Collective Unconscious Epiphany Conceptual Structures Synchronicity Iterative Optimization Prime Numbers Paradigms Traveling Salesman Problem Natural Philosophy Heuristics