Kingdom of Mind PART FOUR

Path to AGI ends in a Cornfield

October, 2025

Previously on Kingdom of Mind...

In Part One, we introduced Turing's "Imitation Game," Moravec's Paradox, and Minsky vs HAL 9000.

In Part Two, we introduced a working definition of Intelligence and the Bitter Lesson.

In Part Three, we introduced the Child-King and the Illusion of Sovereignty.

Brain as a Computer

The brain is not a digital computer, at least not as conceptualized by McCulloch and Pitts in 1943 [1]. They were the first to publish a paper connecting the on/off nature of biological neurons to electronic circuits. Since then, people have been off to the races with the analogy of the brain as a computer. There are a lot of reasons why this analogy breaks down. Artificial neural networks propagate the amplitude of incoming signals; biological neural networks function through frequency. At the most fundamental level, they don't work the same way. But there are some valid comparisons between computers and brains, and that's because brains designed computers.

This is not a mystical association, but rather a practical one.

One principle is that similar problems spawn nearly identical solutions. In science we see repeated examples, such as Newton and Leibniz both inventing calculus. Darwin and Wallace both proposed the theory of evolution by natural selection at the same time. The concept of a 'universal computing machine,' now known as the 'Turing Machine,' was proposed by Alan Turing in 1936, and independently by Emil Post, also in... 1936.

Concurrent publishing can raise the spectre of plagiarism. So let's consider Convergent Evolution.

Convergence: species with extremely different ancestors that evolved to address very similar constraints.

Rabbits and Hares are commonly mistaken for being closely related, but their difference in chromosome count reflects a taxonomic split 55 million years ago. Viscachas are Rodents in the Chinchilla family that have evolved similar behavior and appearance. Perhaps the greatest 'distance traveled' award goes to the 'Australian Easter Bunny.' The Greater Bilby isn't even a placental mammal, but a marsupial, which carries its young in a pouch like the Kangaroo.

A 'Similar Problem' can mean the topic your community deems a worthy goal, or it can mean an environment where, as a prey species, you need long ears and sharp eyesight to determine when to RUN!

The problems facing computer scientists didn't arrive all at once with the Turing Machine. Challenges arose, were solved, and spawned a whole new set of challenges. In asking, "How can a machine solve this problem?" an excellent first approach is "Well, how would I solve it?"

Wondering how you would solve a problem typically means wondering how your brain would solve the problem. For hundreds of years, 'computer' referred to a human being, whose job was to do calculations. 'Computer Memory' is another critical design choice, copied from a solution identified within the human mind. To do computation, you need to remember things.

Functionally, the human mind has had a head start of several hundred thousand years in developing problem-solving capabilities. When looking to replicate that functionality, the brain is a great place to start.

On the other hand, there is a tendency to replicate solutions at inappropriate levels. Usually that level is too specific, too detailed. The early attempts at human flight almost always involved the liberal use of bird feathers. That seems laughable to us now as NO WORKING SOLUTIONS involve copying bird feathers. And yet billions of dollars are being spent creating more and more artificial neurons, while we remain woefully ignorant of how the human mind actually works.

"The technology will work. We just need funding for more wings!" Bamboo Butterfly, Richard Miller c 1961

5,000-10,000 years ago, the materials used in ancient sailing vessels (e.g. bamboo for structure and silk or animal skin sailcloth) were sufficient to have constructed a flexible-wing hang glider. What was missing (we assume) was experimentation and a simplified concept of flight (gliding vs flapping).

Alan Turing sidestepped a "simplified concept of the human mind" by taking a functional approach. How do we normally use our "Theory of Mind" to detect other intelligences? Not by matching an existing definition, but rather through conversation. Socrates would approve. And if it quacks like a duck...

Although Turing's famous 1950 paper was "Computing Machinery and Intelligence," the title is about the last time the term 'Intelligence' appears. His formal proposition was "Can machines think?" His mechanism for deciding this question was language based. Through conversation, can a man convince another man that he is a woman? Can a machine convince a man that it also is a man? I have written previously that I consider this goal has been achieved and the milestone for a machine 'thinking' has been surpassed. But is the separate milestone of 'intelligence' merely one of scale? I don't think so. On our scale of "Stupid<—>Dumb<—>Intelligent," speeding up stupid thinking just makes it stupid faster.

Strong Whorfianism

The quote above embodies two theoretical positions in one sentence. "Language shapes the way we think" becomes 'Weak Whorfianism' if we interpret "language shapes" to mean "language influences." I used the 'influences' interpretation in my article "Speed of Life." (The article calls out our trivialization of 'Millions' to 'Billions' through a minimal change in vocalization.) This weak interpretation is considered banal by many.

'Strong Whorfianism' follows the second part of the quote with emphasis on "language determines how we think." Strong Whorfianism is largely disputed by linguists, anthropologists, and cognitive scientists. But it seems to underpin the rationale behind computerized language processing as the basis for "machine thinking."

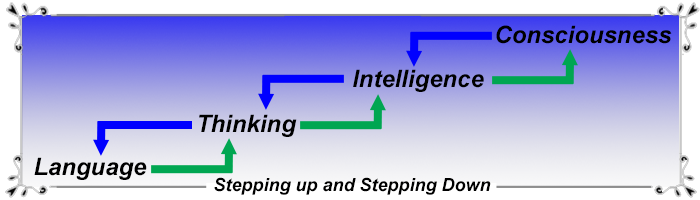

The ascent from Language to Consciousness is assumed to climb the green arrow-ladder like this:

Artificial Neural Network proponents would propose to first capture the human elements of Language statistically, and from there, Thinking becomes an emergent property. This appears to have happened (e.g. the Turing Test), but it required a massive scaling effort to work at all. GPT-1 was trained on 10^9 tokens and could (astonishingly) produce simple sentences. GPT-2 used a ten-fold increase in parameter count and dataset size compared with GPT-1. It was trained on 8 million web pages linked from highly rated Reddit posts (10^10 tokens). This increase in scale meant GPT-2 could reliably produce coherent text up to the single-paragraph level. Current GPTs that can pass the Turing Test are trained on datasets 10,000 times larger than the original 2018 model (10^13 tokens) [2]. So to many, scaling seems to be the answer. (But not to me.)

If it took a 10,000-fold increase to scale from Language to Thinking, would it take another 10,000-fold increase to reach actual Intelligence? And another 10,000-fold on top of that for Artificial Consciousness? If Microsoft requires a dedicated nuclear power plant for artificial Thinking, is anyone serious about an additional 100,000,000 nuclear reactors to "go all the way"?
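For anyone who wants to check the arithmetic behind that last number, here is a minimal Python sketch. It takes the token counts quoted above (10^9 for the 2018 model, 10^13 for current models) and assumes, purely for the sake of the argument, that each further rung of the ladder costs the same factor again and that power demand grows in direct proportion. The variable names are mine, and the proportionality is a rhetorical premise, not a measured law.

```python
# Token counts as quoted in the essay (orders of magnitude only).
GPT1_TOKENS = 10**9       # original 2018 model
CURRENT_TOKENS = 10**13   # models said to pass the Turing Test

# Scaling factor for the step from Language to Thinking.
step_factor = CURRENT_TOKENS // GPT1_TOKENS

# Assumption (the essay's premise, not an established fact):
# each further rung costs the same factor again, and the number of
# power plants grows in direct proportion to scale.
reactors_for_thinking = 1
reactors_for_intelligence = reactors_for_thinking * step_factor
reactors_for_consciousness = reactors_for_intelligence * step_factor

print(f"Language -> Thinking factor: {step_factor:,}")
print(f"Reactors for Intelligence:   {reactors_for_intelligence:,}")
print(f"Reactors for Consciousness:  {reactors_for_consciousness:,}")
```

Under those assumptions the two extra steps multiply to 10,000 x 10,000, which is where the figure of 100,000,000 reactors comes from.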

Let's Try Top Down

Do we want or need to scale up to Artificial Consciousness? If we are happy with machines exhibiting Artificial General Intelligence, maybe we can step down into Intelligence from Consciousness. After all, as 'Thinking Intelligences' ourselves, we can create entirely new forms of Language. But to start at the very top, we would need to know what Consciousness really is. And it's not a singular thing.

In "The Origin of Consciousness in the Breakdown of the Bicameral Mind," Princeton psychologist Julian Jaynes catalogs various theories of Consciousness from the last several centuries. One of these is the 'Helpless Spectator Theory.' This materialist view says evolution created humans as 'conscious automata,' lacking self-determination as with all animals, but with a layer of ineffectual epiphenomena. This position is very contrary to our subjective experience of 'Free Will' and is hardly defensible today. However, as a component, we will carry forward the introspection of being a 'Spectator' of our own Conscious experience.

AWOL

After graduating from UCLA with a degree in "The Interrelation of Conceptual Structures," I was faced with trying to start a career. "Artificial Intelligence" seemed the closest field anyone could relate to, so I did a literature scan on everything available. After a series of phone interviews, I landed a position with the Navy as an "Artificial Intelligence Scientist" through a program for promising recent graduates. I was to report for work the following week, but I never made it.

What follows is another personal account which taught me lessons about consciousness. The price of tuition was abject bodily horror, and I am reluctant to write about it even decades later. I will try to tone down some of the unnecessary details.

My journey to hell began while riding a three-wheel motorcycle and over-jumping the equivalent of a freeway overpass. I could tell I was not going to land on the road at the top as planned, and would instead proceed all the way over and down to the bottom. The heavy vehicle was already rotating into a forward flip so I dove off, hoping some kind of somersault landing and protective gear might save me. But as my body hit the ground hard, the 300-pound vehicle landed on my lower back, bending part of the exposed frame. It didn't matter that I had no ability to breathe; I felt I was cut in half at the waist. "I just killed myself" was the very non-hysterical assessment, pre-recorded from somewhere deep; a creature's biological realization, designed to be played only once.

The cynical reader will realize the author is not dead, and so this could be the re-telling of a near-death experience; a glorious "return to the light" after miraculous medical intervention. But that would be wrong.

In Part Five, we will witness the shattering of a human mind and see which pieces of consciousness fall out.

1. "A Logical Calculus of the Ideas Immanent in Nervous Activity," Warren McCulloch, Walter Pitts. (1943)

2. https://epoch.ai/data-insights/dataset-size-trend